WWDC 2024 Keynote

The keynote video was a real mixed bag. But not a mixed bag like Starburst candies where there are only a few good ones and you’ll still eat the other ones. This is a mixed bag like Starburst candies, artisanal chocolate truffles, and dog turds, and the dog turds got all over everything else.

There’s really good, clever, ingenious stuff in there, but I had a viscerally negative reaction to the part of the keynote presentation I was most trepidatious about: AI. Even in the Apple Intelligence section there were decent features that could be helpful to use in the real world, but then there was the laziest, sloppiest stuff shoved in there too.

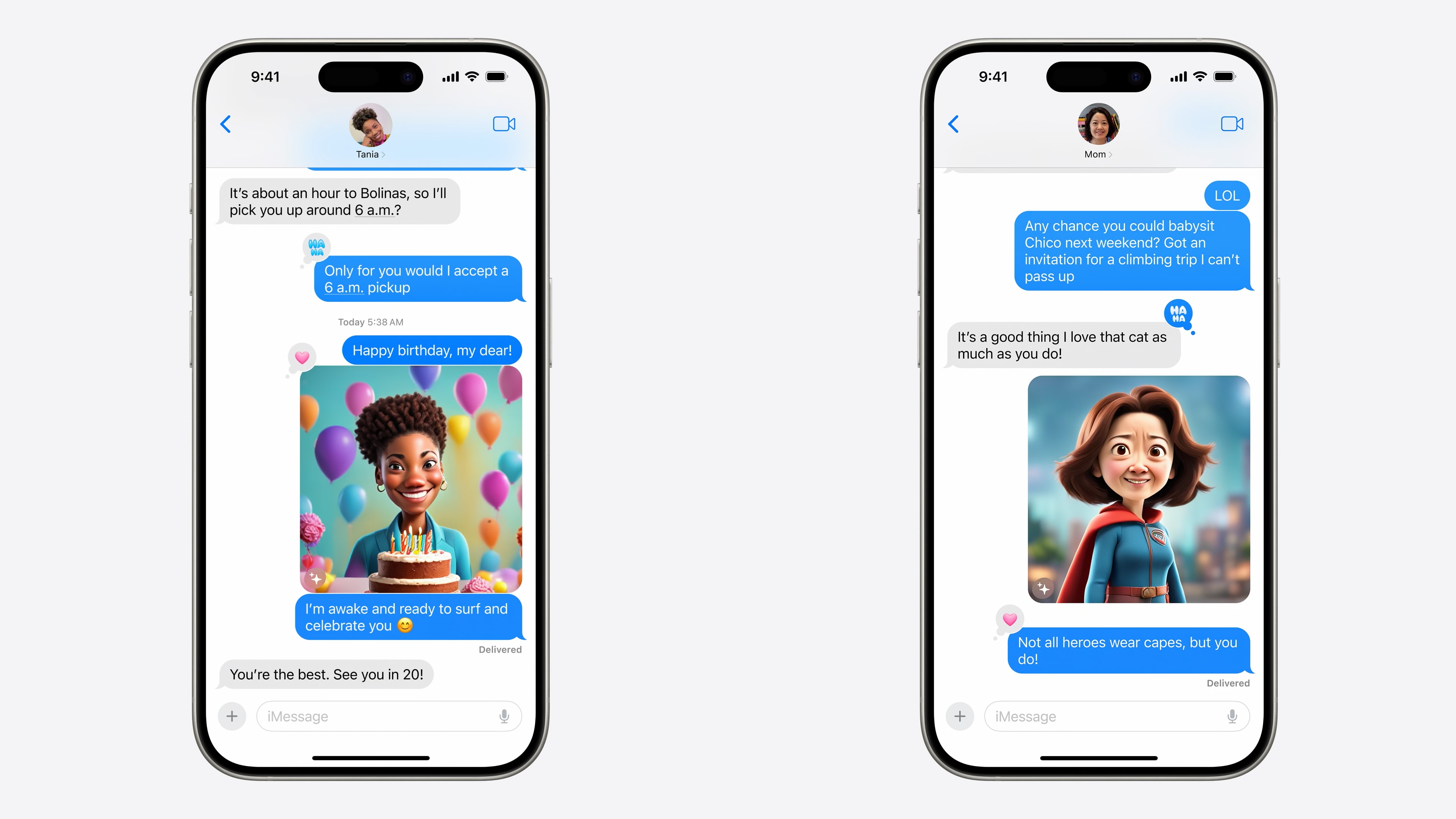

Unsurprisingly, my negativity is nearly all focused on generative image slop. The examples they showed were akin to the output of image generators from four years ago. The appeal, that these models would personally understand us and our relationships, made it all the more alienating when it was applied to generating images instead of to schedules or directions.

The first example, wishing someone a happy birthday, caused such an intense revulsion that for a moment I tried to convince myself it was the setup for a joke, that they’d say they weren’t really doing this, because the image literally looked like an insult to the person you were wishing a happy birthday. But no, they pushed on with an image of a “super mom”, and I began to question the taste of everyone from the generative AI team on up through all the executive ranks.

That image looked nothing like the photo of the mom in question from Contacts. It looked distressingly “AI”, and had that weird, smeary, not-violating-copyright Superman shield. Revolting.

There are people that are entertained by the output of generative AI models, but those people have access to those models already.

The sales pitch for Image Playground, where people select from a pool of seed images to graphically build an image, is just a front for having the system write the prompt for you. As if the greatest difficulty in image generation is the writing. The absurdity that a project like this gets time and resources put into it is mind-boggling to me.

Image Wand depressed me. A perfectly adequate sketch was turned into a more elaborate, but not better, image. Elsewhere, an empty area of a presentation was circled and stuff was mushed in there too. Remember that this is, essentially, a substitute for clip art. And just like clip art, it’s superfluous and often doesn’t add anything meaningful.

Genmoji is quaint compared to the rest of this mess. It generates an image made from the corpses of other emoji artists’ work. It’s likely to be about as popular as Memoji. Unlike Memoji, though, it can be used in the new Tapback system alongside regular emoji, even though it’s an image, so it might not suffer the sticker-fate of other features. God knows how it will render when you use it in a group chat with Android users.

Clean Up is the only thing I liked. See, generative image stuff doesn’t have to be total sloppy shit! It can help people in a practical way! Hopefully, when people rightfully criticize generative slop, they don’t lump this in.

As for the rest of “Apple Intelligence”, it’ll need to get out the door and survive real-world testing. It seems like there’s good stuff mixed with bad stuff. The integration with ChatGPT is what I would categorize as “bad stuff”, and I don’t appear to be alone in that emotional reaction to it. I don’t care if OpenAI is the industry leader; there’s no ethical reason for me to use their products, and Apple made no case for why they chose to partner with a company that has such troubled management, other than OpenAI being the leader in the segment. Their ends-justify-the-means mentality seems to have justified the means.

Speaking of those means: Apple held an interview with Craig Federighi and John Giannandrea, with Justine Ezarik (iJustine) asking the questions (a very controlled, very safe interview), and The Verge’s Nilay Patel, David Pierce, and Allison Johnson were in the audience live blogging it. Nilay:

> What have these models actually been trained on? Giannandrea says “we start with the investment we have in web search” and start with data from the public web. Publishers can opt out of that. They also license a wide amount of data, including news archives, books, and so on. For diffusion models (images) “a large amount of data was actually created by Apple.”

David Pierce:

> Wild how much the Overton window has moved that Giannandrea can just say, “Yeah, we trained on the public web,” and it’s not even a thing. I mean, of course it did. That’s what everyone did! But wild that we don’t even blink at that now.

The public web is intended for people to read and view, and there are specific restrictions on reproducing material from it. Apple, a company notoriously litigious about companies trying to make things that even look like what they’ve made, has built this into the generative fiber of the operating system tools they are deploying. I do not find that remotely reassuring, and it isn’t any better than what OpenAI has said it is doing. [Update: Nick Heer wrote up the sudden existence of Applebot-Extended. Get in your time machines and add it to your robots.txt file.]
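Following that update’s advice means adding a two-line entry to robots.txt. As a minimal sketch, assuming Python’s standard-library `urllib.robotparser` as a stand-in for how a generic crawler reads the file, the block below parses those two lines and checks which user agents they block. The `can_fetch` helper and the example.com URL are hypothetical. The parser only shows how the rule is matched; as I understand Apple’s documentation, Applebot-Extended doesn’t crawl pages itself, it only controls whether content Applebot already crawled can be used for training.

```python
from urllib.robotparser import RobotFileParser

# The two robots.txt lines from the advice above. The helper and URL
# below are hypothetical, for illustration only.
ROBOTS_TXT = """\
User-agent: Applebot-Extended
Disallow: /
"""


def can_fetch(agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return whether `agent` may fetch `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    # parse() takes a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    page = "https://example.com/blog/wwdc-2024-keynote"  # hypothetical URL
    for agent in ("Applebot-Extended", "Applebot", "Googlebot"):
        status = "allowed" if can_fetch(agent, page) else "blocked"
        print(f"{agent}: {status}")
```

Run as a script, it should report Applebot-Extended as blocked and the other two as allowed, since the rule names only the extended token. One quirk worth knowing: Python’s parser matches user-agent names by substring, so a rule written for plain `Applebot` would also match `Applebot-Extended` there.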

Apple did say the system will ask before sending anything to OpenAI, but it will also suggest sending things to OpenAI. I very much hope there is a toggle to stop Siri from even suggesting it, but Siri suggests a lot of stuff you can’t turn off, so who can say for certain.

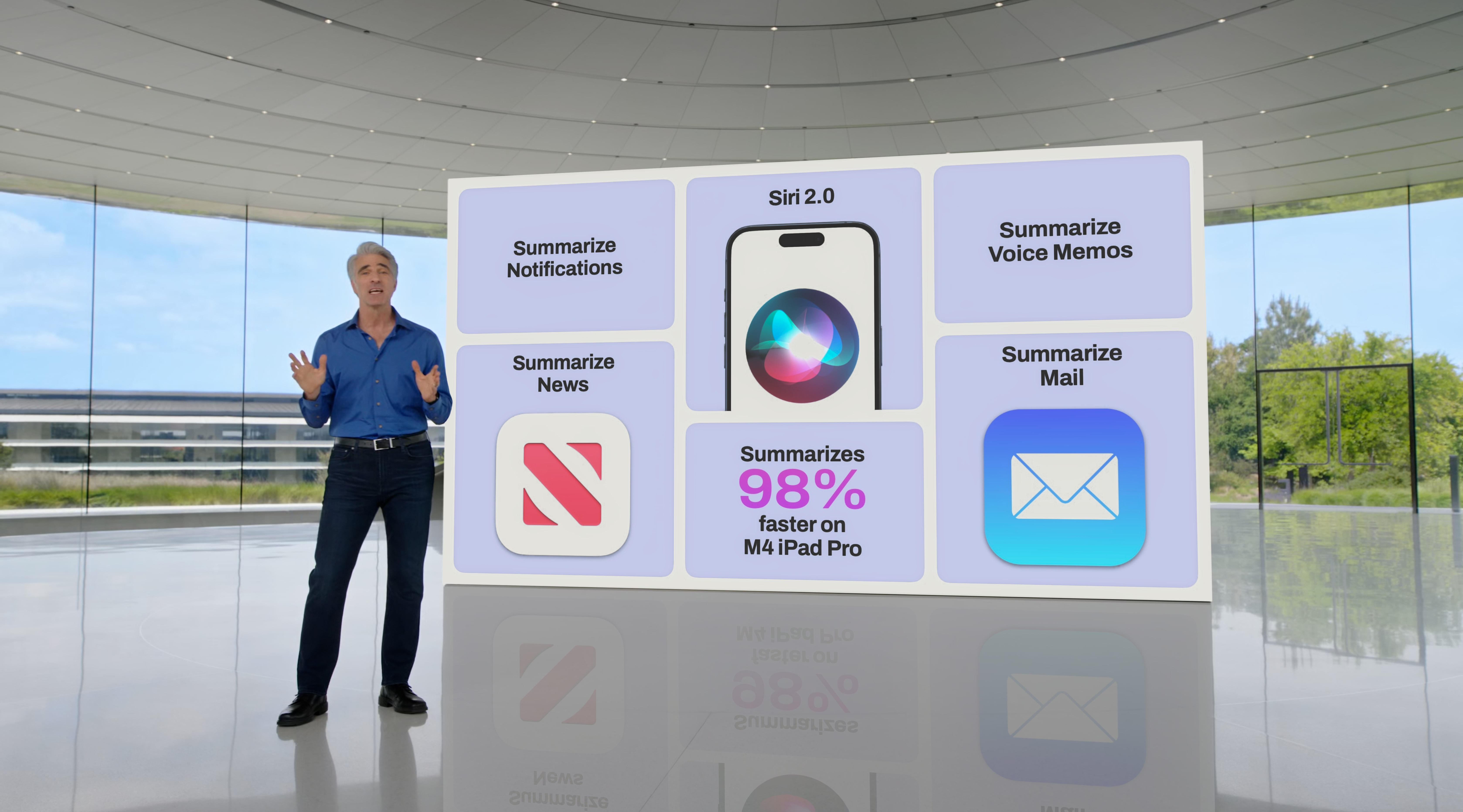

The other text features are pretty much what I hoped they wouldn’t be. Summarizing. I wrote a blog post for Six Colors about what I hoped I wouldn’t see AI used for at WWDC, and they did all of it. It’ll write or rewrite things. It’ll summarize things. It’ll change tone. It’ll introduce spelling errors.

David Pierce, again:

> Everyone has the same ideas about AI! Summarization, writing mediocre emails, improving fuzzy search when you don’t know exactly the keywords to use. That is EVERYONE’S plan for AI.

Notification prioritization could be something, but it still can’t filter out junk notifications from overzealous marketers abusing their apps’ notification privileges.

I’m not filled with joy by any of it, and that really smears the rest of the diligent and thoughtful work done by many other people at Apple with this shit stain of slop.

So then here’s a brief bit about cool stuff: I really like the idea of iPhone mirroring. I like revising notifications. I like continuity improvements. The new Mail for iOS stuff looks good. I’m cautiously optimistic about the new Photos app, even though I almost exclusively want the grid, and the current design makes it easy to ignore the terrible machine learning suggestions.

Math Notes (despite its eminently mockable name, which inspires people to shove it in a locker or give it a swirly) seems like such a neat idea. It capitalizes on the long-awaited “Calculator for iPad” but makes it actually relevant to the iPad.

I was surprised to see tvOS getting any new features at all. The only announced feature that seems interesting is InSight, which is like the X-Ray feature Amazon Prime Video has had forever. This is objectively good and I’m happy they did it. Unfortunately, it’s only for Apple TV+ shows.

The other changes, turning on captions when the TV is muted, or automatically when skipping back, are … fine.

Highlighting 21:9 format support for projectors made me furrow my brow, because that seems like a feature for a very, very, very specific and tiny group. It’s not bad that they did it, but just weird to brag about.

In a bizarre twist of fate, the iPadOS section of the keynote made a big deal about how important apps are and demoed the TV app for iPadOS to show off the Tab Bar, which is literally the old pill-shaped floating bar at the top of the TV app, and how it can turn into the Side Bar, which is the new UI element Apple introduced at the end of last year.

The Tab Bar can be customized with items from the Side Bar. This is supposed to be a design feature that third-party developers should use, which is why it was demoed like this, but it immediately made me question its absence from the tvOS portion that came right before, where poor customization and layout are a problem.

Also, this addresses zero of the reservations iPadOS power users had. No updates to the Files app, or multitasking, or the new tiled window controls shown for macOS. But it gets Tab Bars that turn into Side Bars as a new paradigm.

Speaking of media weirdness, I’ll circle back to the beginning of the presentation for visionOS 2. I still don’t have any plans to buy a headset of my own, so this part really didn’t pique my interest much. This is the yellow Starburst in the mixed bag.

It seems like there’s still a lot of catching up to do on generating media, and on proposing tools for generating media. In my post suggesting what to do for Vision Pro content, I mentioned that Apple needs user-generated video, and that the largest source of user-generated stereo and immersive video is YouTube.

Which is why Apple announced that there would be a Vimeo app for Vision Pro this fall for creators to use.

Vimeo is the artsy version of YouTube, and it’s been slowly dying forever. It’s never totally died, which is good because I host my VFX demo reel there, but it’s not growing. They even killed their tvOS app, and most of their other TV apps, in June of 2023, and told affected customers to cast from their iPhones or Android phones, or to use a web browser on their TV.

Naturally, Vimeo is the perfect partner for growing a first-in-class stereoscopic and immersive experience for video creators. Vimeo doesn’t seem to want what people think of as “creators”; it wants filmmakers who make artsy short films.

A huge flaw in that line of thinking is that Apple marketing increasingly relies on YouTubers to promote and review their products. There is a disconnect here. I don’t want a YouTube monopoly, because it leads to things like their tacky screensaver, but this doesn’t seem like a coherent plan for video content production.

They also mentioned partnering with Blackmagic Design (makers of many fine and “fine” media products) for stereoscopic support, and highlighted Canon’s weirdly expensive stereo camera lens that they’ve been selling for a while. Truly bizarre, and in my opinion very unlikely to go anywhere interesting.

The other somewhat relevant thing is automatic stereo conversion of images. Apple did that thing where they showed image layers parallaxing inside a rounded rectangle, so there’s absolutely no way to judge how well it works, but it is the kind of thing I’ve been suggesting they do instead of relying on mismatched iPhone lenses, with the quality loss inherent in that approach.

Surely, many will dismiss any post-processed stereo, but it could theoretically work better for some. If they get stable generative fill some day, we may see it for video, or at least stereo-converted Live Photos. I’m not mad about that at all. I don’t have a burning desire to view media that way, but it’s a much more practical approach for people buying these headsets.

The iOS changes that let you move icons around are good, but the dark mode icons all look kind of bad. Which is weird, because I use Dark Mode on my iPhone 24/7, so I feel like I should prefer them. It’s odd to even think such a thing, but the icons all look strangely ugly with the background color just swapped for black. The icons also look quite appalling in the color themes at the moment. It feels like the sort of thing you’d find on a Windows skinning site circa 2001. It’s too monochromatic, and flattens everything out instead of being an accent color. I don’t quite get the vibe, though I know the idea of doing something like this appeals to people. I’m not certain the execution will.

Concluding the video with “Shot on iPhone. Edited on Mac.”, after the previous event’s video was allegedly edited to some degree on both iPads and Macs, is perhaps fitting given the realities of Final Cut for iPad support (and managing files! Simple-ass files!), and is notable for that reason alone.

Just a real mixed bag, smeared with sloppy AI that feels as rushed and as “check the box” as a lot of people were worried it would be. Like the product of a company that both learned a lesson from the Crush ad and didn’t learn anything at all. As these things slowly roll out in betas “over the next year”, I hope people apply the necessary, probing criticism that can help guide Apple to make better decisions in the shipping products, and for 2025.
