My blog is made by a rube, if you will. This site is built by a static blog generator that’s basically a single 300-ish line Python script from 2014. I was “inspired” to make it when static site generators were all the rage and I figured it would help me with my Python skills, as Python is a language often used in VFX software. I don’t recommend people use any of my code, or write their own site like this. There are better ways to spend your time.

I’ve periodically written about the changes to my site over time, like when I made the jump from Python 2 to 3, and transitioned servers back in October of 2023. We’re overdue for an update, especially as I have been tinkering quite a bit, and it happens to coincide nicely with ATP’s recent members-only episode on their own sites. I might as well start from the top.

Caddy serves the files. I had originally used Twisted on my old server, because it’s in Python, but I could never figure out how to get the certificates working in Twisted and Caddy just works. I don’t even have to know Go. I can’t recommend it highly enough if you’re looking for a simple solution to just serve files.

The Python script that makes this whole mess is yakbarber.py. When I converted it to Python 3, I named the new version yakbarber3.py so I could run the two versions side by side to make sure the output was the same (it was close enough).

It takes Markdown files from /content, converts them to HTML, and uses mustache templates to wrap the HTML with the necessary headers and such. The templates also generate the RSS/Atom feed. The results go into /output. That’s more or less how it worked for over 10 years, and is basically how it continues to work. You can see the commits for everything as I worked through various parts of it over the past month.

The site settings live in another file and were imported with the imp module. Yes, that module is deprecated in Python 3, and it would generate a warning every time it ran, even though it would run successfully. I asked Gemini or Copilot, I can’t remember which, and it suggested switching it out for importlib.util.module_from_spec(), and it’s fine.
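For anyone stuck on the same warning, that kind of swap can be sketched like this. It assumes the settings live in a plain .py file; load_settings and the file name in the usage note are made up for illustration and aren’t taken from the actual script.

```python
import importlib.util
from pathlib import Path


def load_settings(path):
    """Load a Python file as a module without the deprecated imp module."""
    path = Path(path)
    # Build a module spec from the file location; the module name is the file stem.
    spec = importlib.util.spec_from_file_location(path.stem, path)
    if spec is None or spec.loader is None:
        raise ImportError(f"Cannot load settings from {path}")
    module = importlib.util.module_from_spec(spec)
    # Run the file's code inside the new, empty module object.
    spec.loader.exec_module(module)
    return module
```

Calling something like load_settings("settings.py") returns a module object, so whatever is defined at the top level of that file shows up as attributes on it.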

That’s another thing that’s changed in 11 years. I used to have to search StackOverflow for a problem that matched the problem that I had, or was close enough, and then try to kludge together something that fit my needs. I never figured out how to properly replace imp but I’m sure someone wrote about it somewhere. Now I can query LLMs that have paid StackOverflow and they synthesize something that more closely matches my situation.

They’ve been total horseshit at making things from scratch, and I don’t just blindly accept their output, but they do help me when I get stuck on something I don’t know. More importantly, I can look at their solution and then figure out there’s a better way to do this. I’m not “vibe coding” but it is more of a back and forth.

My feelings on LLMs are nuanced, especially where creativity is concerned. Being able to get me through a roadblock is not the same thing as “make my whole web site” which I think is a valueless proposition. Indeed, I did ask it to write a static blog generator using the same specs as my blog and it wasn’t what I wanted. I don’t see the appeal in just letting LLMs dictate the whole thing.

Case in point: I wanted to add support for yakbarber3.py to monitor for changes to the /content directory, or the mustache templates, and then regenerate the site automatically without me having to run the script. The solutions were to either use inotify outside of my script to run it when files were changed, or to use watchdog (a Python module which would be inside the script itself) to monitor for changes. I decided on watchdog because I want to continue to refine what files are read and written to disk in the future, and this seemed like the best way to achieve that.

I used Copilot in VSCode — everyone’s raving about the thing so why not try it? It did a decent job constructing what I asked for. It would monitor those directories for changes, and only for the specified file types.

```python
class ChangeHandler(FileSystemEventHandler):
    def on_modified(self, event):
        if event.src_path.startswith(contentDir) and (event.src_path.endswith('.md') or event.src_path.endswith('.markdown')):
            print(f"Detected change in {event.src_path}. Re-running main()...")
            main()
        elif event.src_path.startswith(templateDir):
            print(f"Detected change in {event.src_path}. Re-running main()...")
            main()

    def on_created(self, event):
        if event.src_path.startswith(contentDir) and (event.src_path.endswith('.md') or event.src_path.endswith('.markdown')):
            print(f"Detected new file {event.src_path}. Re-running main()...")
            main()
        elif event.src_path.startswith(templateDir) and (event.src_path.endswith('.html') or event.src_path.endswith('.xml')):
            print(f"Detected new file {event.src_path}. Re-running main()...")
            main()
```

I needed to refine the sleep interval between runs, and I also wanted to make sure that a bunch of successive changes that happened quickly wouldn’t trigger main() over and over. Copilot suggested a debounce timer.

```python
def schedule_main(self):
    global debounce_timer
    if debounce_timer is not None:
        debounce_timer.cancel()
    debounce_timer = threading.Timer(3.0, self.run_main)  # 3-second debounce window
    debounce_timer.start()

def run_main(self):
    global is_running
    if not is_running:
        is_running = True
        try:
            main()
        finally:
            is_running = False
```
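If the debounce idea is new to you too, here is the same trick as a small self-contained sketch you can run on its own. Debouncer, its delay value, and the fake “file changed” loop are all hypothetical; only threading.Timer and its cancel() method come from the snippet above.

```python
import threading
import time


class Debouncer:
    """Run a callback once after a quiet period, however often it is triggered."""

    def __init__(self, delay, callback):
        self.delay = delay
        self.callback = callback
        self._timer = None
        self._lock = threading.Lock()

    def trigger(self):
        with self._lock:
            # Cancel the pending run, if any, and restart the countdown.
            if self._timer is not None:
                self._timer.cancel()
            self._timer = threading.Timer(self.delay, self.callback)
            self._timer.daemon = True
            self._timer.start()


if __name__ == "__main__":
    runs = []
    debouncer = Debouncer(0.2, lambda: runs.append(time.time()))
    for _ in range(5):  # five rapid "file changed" events
        debouncer.trigger()
        time.sleep(0.01)
    time.sleep(0.5)  # wait past the quiet period
    print(f"callback ran {len(runs)} time(s)")
```

Every trigger throws away the previous countdown, so a burst of saves collapses into one rebuild that starts after things go quiet.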

Like I could study reference materials about how to implement this, but again, I wouldn’t really know where to start or what I was trying to do without a lot of other programming experience that was not going to be relevant to the rest of my life. This tool doesn’t make me a professional programmer, it just helped me with a hobby project, and I know just enough about it to understand and edit it.

One big problem that I had not anticipated was that the script was originally written to modify variables as they fell down through the functions. That meant that when watchdog started the loop over, several variables wouldn’t be reset and would behave in unexpected ways. Copilot was no use for debugging this. It suggested I put print statements everywhere, but the variables held entire blog posts, so that’s too much printing to be useful.

However, I correctly diagnosed that the problem was my poorly written code from my original script. I separated out the parts that were writing over data, and I also reduced the number of places that data was being sorted. That was tricky, like untangling cables from that box of cables that you 100% keep meaning to individually bundle.
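A generic illustration of that failure mode, not the actual variables from my script: build_leaky, build_fresh, and the posts list are invented names. The point is only that state kept at module level survives from one run of main() to the next when the script never exits.

```python
posts = []  # module-level list: survives from one run to the next


def build_leaky(paths):
    """Appends to the shared list, so a second run still sees the first run's posts."""
    for path in paths:
        posts.append(path)
    posts.sort()
    return posts


def build_fresh(paths):
    """Builds a new list on every run instead of mutating shared state."""
    return sorted(paths)


if __name__ == "__main__":
    content = ["b.md", "a.md"]
    build_leaky(content)
    print(build_leaky(content))  # second run: every post is listed twice
    build_fresh(content)
    print(build_fresh(content))  # second run: same result as the first
```

When the script ran once and exited, the leaky version was harmless. It only bites once something like watchdog calls the same function again in the same process.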

I couldn’t have vibe-coded my way through this, but it was useful to be able to highlight a specific line and ask Copilot what the change I made would do, just to double check my work.

One of the recommendations Copilot provided (you can just ask for its “opinion” on things) was that I should asynchronously render the blog post pages (not the index or the RSS feed). This was my first time using Python’s asyncio library, so I got to see how my serial scripting turned into async scripting.

It’s mostly the same, but I get to use fun words like with lock and asyncio.gather. Instead of def I get to use async def. This was not as intimidating as I thought it was going to be, and I knew exactly where it would be applied.
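Here is roughly what that shape looks like, as a toy sketch and not the real rendering code. render_post, render_all, and the post dictionaries are made up, the sleep stands in for actual template work, and I’m assuming an asyncio.Lock for the lock.

```python
import asyncio


async def render_post(post, lock, rendered):
    """Pretend to render one post; the sleep stands in for real template work."""
    await asyncio.sleep(0.01)
    html = f"<article><h1>{post['title']}</h1></article>"
    # The lock guards the shared dict while this task records its result.
    async with lock:
        rendered[post["slug"]] = html
    return post["slug"]


async def render_all(posts):
    lock = asyncio.Lock()
    rendered = {}
    # gather() runs all the render_post coroutines concurrently and waits for them.
    await asyncio.gather(*(render_post(p, lock, rendered) for p in posts))
    return rendered


if __name__ == "__main__":
    posts = [{"slug": "one", "title": "One"}, {"slug": "two", "title": "Two"}]
    pages = asyncio.run(render_all(posts))
    print(sorted(pages))
```

The index and feed would still be built the ordinary serial way after render_all returns, since they need every post at once.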

There were a lot of changes that Gemini and Copilot suggested that I just didn’t feel like implementing. Gemini would just rewrite swaths of it to where the code was unrecognizable. Copilot had a few occasions where it barfed on a change (it started to reprint the imports all over again in the middle of the file).

Both of them really wanted me to move from mustache templating to jinja2. They don’t really “want” it, exactly. There’s a higher probability that someone recommended jinja2 templating, which means that I get to have that suggested. I saw plenty of people say this online in 2014 when I picked mustache over jinja2. Mustache templating suits my needs and is very easy.

Rejecting the change is simple, but it’s interesting that dogma about template preferences could steer someone who didn’t know what they wanted to jinja2. No shade no lemonade on jinja2, but it can be overkill if your templating needs are relatively simple.

Previously, I used a bash script named site_build.sh to run yakbarber3.py and then copy all the files from output and the template resources to the directory that actually serves the files. I don’t write the files my site generates to the directory that serves them because I want to reduce the chances that something will break and then my site won’t serve any files. Also, I didn’t have the MIME type and redirect set up correctly for joe-steel.com/feed, so the old way I did it was to make a copy of the feed.xml file that just didn’t have a file extension. Very hacky.

This superstition about keeping site generation and site serving separate meant that, with yakbarber3.py now always running and always looping, I wanted to replace the bash script with something that monitors for changed files and copies them over. Since I was already using watchdog to do that in the site generator script, it seemed natural for me to continue with that. Thus, I put together sync_to_serve.py.

```python
import fnmatch
import os
import shutil
import time

from watchdog.events import FileSystemEventHandler
from watchdog.observers import Observer

SYNC_DIRECTORIES = {
    "output/path": "server/path",
    # Add more source-destination pairs as needed
}

FILE_PATTERNS = ["*.html", "*.css", "*.xml"]


class FileSyncHandler(FileSystemEventHandler):
    def __init__(self, source_directory, destination):
        super().__init__()
        self.source_directory = source_directory
        self.destination = destination

    def on_modified(self, event):
        if not event.is_directory and self.matches(event.src_path):
            self.sync_file(event.src_path)

    def on_created(self, event):
        if not event.is_directory and self.matches(event.src_path):
            self.sync_file(event.src_path)

    def matches(self, src_path):
        filename = os.path.basename(src_path)
        for pattern in FILE_PATTERNS:
            if fnmatch.fnmatch(filename, pattern):
                return True
        return False

    def sync_file(self, src_path):
        try:
            relative_path = os.path.relpath(src_path, self.source_directory)
            dest_path = os.path.join(self.destination, relative_path)
            os.makedirs(os.path.dirname(dest_path), exist_ok=True)
            shutil.copy2(src_path, dest_path)
            print(f"Synced: {src_path} -> {dest_path}")

            # Special case for feed.xml
            if os.path.basename(src_path) == "feed.xml":
                feed_dest_path = os.path.join(self.destination, os.path.dirname(relative_path), "feed")
                shutil.copy2(src_path, feed_dest_path)
                print(f"Synced: {src_path} -> {feed_dest_path}")
        except Exception as e:
            print(f"Error syncing {src_path}: {e}")


if __name__ == "__main__":
    observers = []
    for source_dir, destination_dir in SYNC_DIRECTORIES.items():
        event_handler = FileSyncHandler(source_dir, destination_dir)
        observer = Observer()
        observer.schedule(event_handler, source_dir, recursive=True)
        observer.start()
        observers.append(observer)

    try:
        while True:
            time.sleep(1)
    except KeyboardInterrupt:
        for observer in observers:
            observer.stop()

    for observer in observers:
        observer.join()
```

This is significantly longer than the bash script, of course, but pretty straightforward in that it does a one-way copy, like the older script did. It just does it continuously.

The other thing that I’ve changed on the site is the CSS and some JavaScript. I hate JS. It’s this weird thing that looks sort of like Python, but behaves in different ways. I had a very specific thing that I wanted to do, which was format the date and time displayed on each post to be localized, and also pleasant to look at. Gemini instantly kicked out the code I needed and I added it to my template.

```javascript
function localizeDates() {
  const dateElements = document.querySelectorAll('.date date');

  dateElements.forEach(dateElement => {
    const datetimeString = dateElement.getAttribute('datetime');
    if (datetimeString) {
      const date = new Date(datetimeString);
      if (!isNaN(date)) {
        const localizedString = date.toLocaleString(undefined, {
          year: 'numeric',
          month: 'long',
          day: 'numeric',
          hour: 'numeric',
          minute: 'numeric',
        });
        dateElement.textContent = localizedString;
      } else {
        console.error("Invalid date format:", datetimeString);
      }
    }
  });
}

// Call the function when the DOM is loaded
document.addEventListener('DOMContentLoaded', localizeDates);
```

That only took me a decade to get around to. It’s much better now.

Likewise the CSS problems on my site were always around. I had separate font-sizes for mobile and desktop. Sometimes people would reach out to tell me that my site was rendering incorrectly, for example when they used StopTheMadness in Safari. The developer, Jeff Johnson, helped me figure out what the problem was. I had never set:

<meta name="viewport" content="initial-scale=1.0">

So in a regular mobile Safari browser it had some weird scale which I had worked around, and now needed to undo that. It felt like a huge relief to clean that up.

Now that I had a site that could monitor for changes and regenerate itself without me having to sign in through SSH, I needed to make posting easier. One of the irritations with posting is that I have YAML front matter in my blog posts that needs to have certain information present.

```yaml
Title: My Clever Title Here
Date: YYYY-MM-DD 24:00:00
Author: joe-steel
Category: text
```

There are optional YAML entries for Link, which formats the post as a link-blog post with a permalink and makes the header point to the original source. The other optional entry is Image, which sets an Open Graph image to use for the post if I want one.

The date and time are the annoying part as the rest of it is just copy and paste. I have repeatedly made silly mistakes when I format the date and time just due to human error.
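To make the “silly mistakes” concrete, this is the kind of check that would catch them. It’s a sketch, not what yakbarber3.py does: parse_header and check_date are invented names, and I’m assuming the header is plain “Key: value” lines followed by a blank line, with the date written as 24-hour YYYY-MM-DD HH:MM:SS.

```python
from datetime import datetime

DATE_FORMAT = "%Y-%m-%d %H:%M:%S"  # assumed from the example header above


def parse_header(text):
    """Split 'Key: value' header lines from the body at the first blank line."""
    header, _, body = text.partition("\n\n")
    meta = {}
    for line in header.splitlines():
        # Split on the first colon only, so URLs in Link values stay intact.
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip().lower()] = value.strip()
    return meta, body


def check_date(meta):
    """Return the parsed date, or raise ValueError if it is missing or malformed."""
    if "date" not in meta:
        raise ValueError("missing Date")
    return datetime.strptime(meta["date"], DATE_FORMAT)
```

A header with something like “2:05pm” in it raises ValueError instead of quietly producing a post with a nonsense time stamp.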

I don’t want the blog generation to write the date and time for me because I don’t want it to accidentally overwrite things in the files —right now it’s read-only when it works with the markdown.

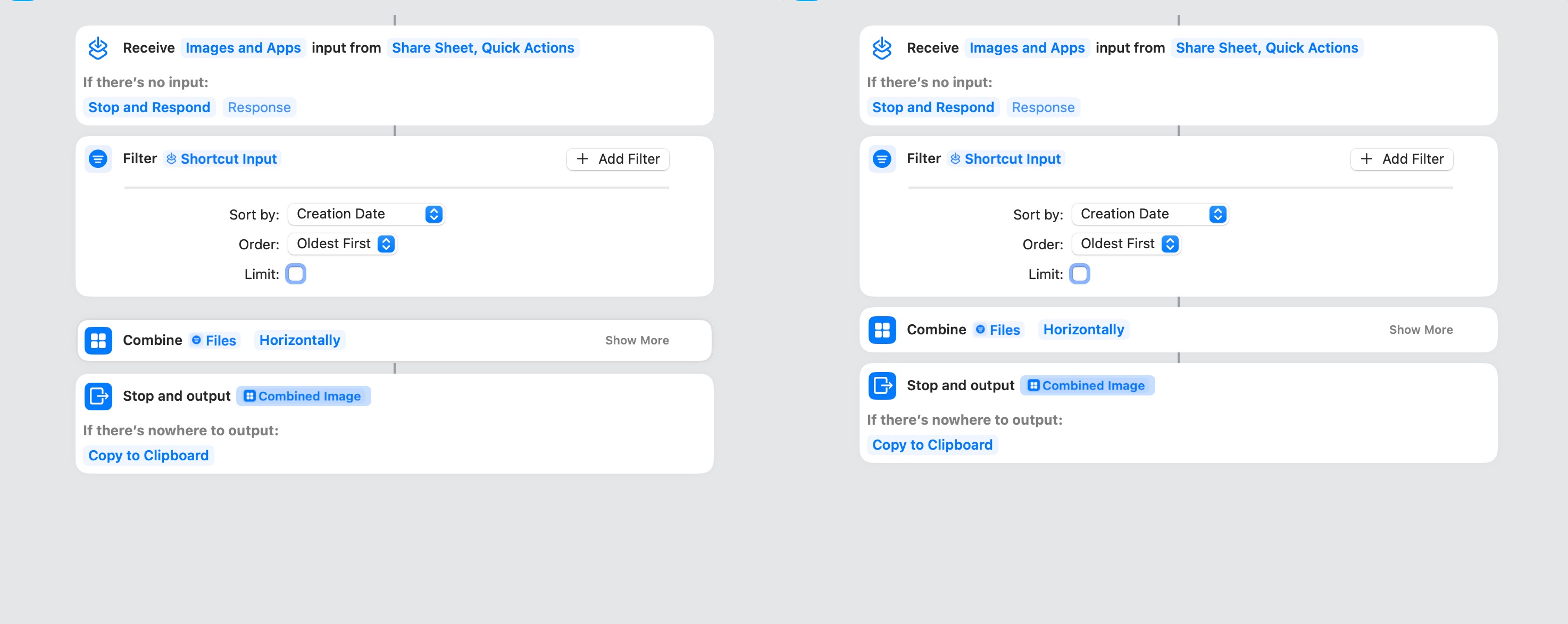

I do want this to be multi-platform so I can write posts when I’m not with my computer. That means I need iOS support. That means Shortcuts. Sigh.

That was what that whole post complaining about time zone conversion was about. I finally conquered it and it could produce the formatted date and time on command, but it was a pain. Also I couldn’t figure out how to easily do it for a file that I had already written. It was easy to write out this stub, but I didn’t want to do that when I started writing or the date and time would be off by the time I had finished.

That’s when I turned to Drafts. Greg Pierce does an amazing job with Drafts, but I use approximately 0.1% of its full potential. I had never successfully automated anything in it before.

Fortunately, Drafts can run JavaScript, and while I hate JavaScript, I know JavaScript can very easily handle the date logic. To protect all of the little babies, Apple doesn’t let us run JavaScript in Shortcuts unless it’s JS on an active page in Safari.

I also know that I don’t even have to write the JS. Just explain the logic to Gemini, or whatever. I also know JS can split the lines to turn the top line of the draft text into the title, and then everything else goes below as the body. It’s also amended so that it can handle the extra YAML, or if I run it again on a file that already has a header.

```javascript
let options = {
  timeZone: 'America/Los_Angeles',
  year: 'numeric',
  month: '2-digit',
  day: '2-digit',
  hour: '2-digit',
  minute: '2-digit',
  second: '2-digit',
  hour12: false
};

let now = new Date();

// Round to the nearest 5-minute interval
let minutes = now.getMinutes();
let remainder = minutes % 5;
if (remainder < 3) {
  now.setMinutes(minutes - remainder);
} else {
  now.setMinutes(minutes + (5 - remainder));
}
now.setSeconds(0, 0); // Reset seconds and milliseconds

let date = now.toLocaleString('sv-SE', options).replace(/:[0-9]{2}$/, ':00');

let title = draft.title;
let body = draft.content;
let lines = body.split('\n');

let yamlTitle = null;
let yamlDate = null;
let yamlAuthor = null;
let yamlCategory = null;
let yamlLink = null;
let yamlImage = null;
let nonYamlLines = [];

// Check for existing YAML-like lines
for (let i = 0; i < lines.length; i++) {
  let line = lines[i].trim();
  if (line.startsWith('Title: ')) {
    yamlTitle = line;
  } else if (line.startsWith('Date: ')) {
    yamlDate = `Date: ${date}`; // Update Date
  } else if (line.startsWith('Author: ')) {
    yamlAuthor = line;
  } else if (line.startsWith('Category: ')) {
    yamlCategory = line;
  } else if (line.startsWith('Link: ')) {
    yamlLink = line;
  } else if (line.startsWith('Image: ')) {
    yamlImage = line;
  } else {
    nonYamlLines.push(lines[i]); // Keep all other lines, including empty ones
  }
}

// Remove leading empty lines from nonYamlLines
while (nonYamlLines.length > 0 && nonYamlLines[0].trim() === '') {
  nonYamlLines.shift();
}

// Construct the new YAML section
let newYaml = [];

if (yamlTitle) {
  newYaml.push(yamlTitle);
} else {
  newYaml.push(`Title: ${title}`);
}

if (yamlDate) {
  newYaml.push(yamlDate);
} else {
  newYaml.push(`Date: ${date}`);
}

if (yamlAuthor) {
  newYaml.push(yamlAuthor);
} else {
  newYaml.push(`Author: joe-steel`);
}

if (yamlCategory) {
  newYaml.push(yamlCategory);
} else {
  newYaml.push(`Category: text`);
}

if (yamlLink) {
  newYaml.push(yamlLink);
}

if (yamlImage) {
  newYaml.push(yamlImage);
}

// Combine YAML and non-YAML lines
let newBody = newYaml.join('\n') + '\n\n' + nonYamlLines.join('\n');

draft.content = newBody;
draft.update();
```

This took significantly less time than trying to get Shortcuts to do this. It does mean that I have to use Drafts if I want access to this, but if I’m on the go, I’d likely be writing a short post, which could be in Drafts. Then I save that to my /content folder in Dropbox, yakbarber3.py sees the file, and regenerates the site, which sync_to_serve.py copies to the directory that serves the static files. It’s a simple 5-ish step process with most of it automated.

The moment where all this worked was when I wrote my link-blog post yesterday. I was a few thousand feet in the air and it published. I even had a mistake, where there was an errant new-line that pushed the Link: YAML down a line and it processed it as a regular post. I fixed the file, and it just regenerated as the expected link-blog style post it was supposed to be.

In the future, I want to handle draft posts with status in the YAML for draft or published. Then it can generate the page so I can check formatting without it adding to the RSS or index.
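None of this exists yet, but the filtering half of it could be as small as the sketch below. listed_posts and the status key are hypothetical, and I’m assuming that a post with no status at all counts as published so old posts don’t need editing.

```python
def listed_posts(posts):
    """Keep only the posts that belong in the index and the RSS feed."""
    # A missing status is treated as "published" (an assumption, for old posts).
    return [p for p in posts if p.get("status", "published") == "published"]


if __name__ == "__main__":
    posts = [
        {"slug": "finished", "status": "published"},
        {"slug": "half-baked", "status": "draft"},
        {"slug": "old-post"},
    ]
    print([p["slug"] for p in listed_posts(posts)])
```

Every post would still get its own page rendered; only the index and feed would be built from the filtered list.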

I also want to have YAML defaults for certain tags, like author, and category. It is extremely unlikely that anyone else will be writing on my blog, or I’ll use a category other than text. This YAML is a legacy of my original export and import process where I used Pelican to get my very old Tumblr posts. It’s superfluous.
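The defaults part is about the easiest thing on this list. A sketch, with DEFAULTS and with_defaults as made-up names and the values lifted from the header example earlier in the post:

```python
DEFAULTS = {"author": "joe-steel", "category": "text"}  # values from the header example


def with_defaults(meta):
    """Fill in any missing header fields; fields the post does set win."""
    return {**DEFAULTS, **meta}


if __name__ == "__main__":
    print(with_defaults({"title": "My Clever Title Here"}))
    print(with_defaults({"title": "Guest Post", "author": "someone-else"}))
```

Because the post’s own fields are unpacked last, an explicit Author or Category still overrides the default on the rare occasion I need it to.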

I also want to handle scheduled posts with the date YAML, where it will hold the site generation step until the time stamp is reached. Sometimes I finish writing something at night but I know no one’s going to read it, and it’ll get buried under new posts in the morning. For that to work, I really can’t have human error in the stamps.
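As a thought experiment, the “is it time yet” check might look like this. split_due is a hypothetical name, the date format is the same assumed YYYY-MM-DD HH:MM:SS as the header example, and the sketch ignores time zones entirely by comparing naive datetimes.

```python
from datetime import datetime

DATE_FORMAT = "%Y-%m-%d %H:%M:%S"  # assumed header format


def split_due(posts, now):
    """Separate posts whose date has passed from those still waiting."""
    due, waiting = [], []
    for post in posts:
        when = datetime.strptime(post["date"], DATE_FORMAT)
        (due if when <= now else waiting).append(post)
    return due, waiting


if __name__ == "__main__":
    posts = [
        {"slug": "tonight", "date": "2025-04-20 23:30:00"},
        {"slug": "tomorrow", "date": "2025-04-21 08:00:00"},
    ]
    due, waiting = split_due(posts, now=datetime(2025, 4, 21, 0, 0, 0))
    print([p["slug"] for p in due], [p["slug"] for p in waiting])
```

A malformed date raises ValueError here, which is exactly why the stamps can’t have human error in them.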

Mastodon integration would also be nice where the blog has its own account. I might use Robb Knight’s echofeed. I couldn’t even explain why I’m not using it already.

Lastly, I need to come up with a better system to handle images. Whenever there are images on this blog I have manually uploaded them to directories on my server and then copied the paths back to put into the blog posts. It’s a very annoying step. I don’t want to just throw a bunch of images into /images because that will just be a mountain of clutter. I can always make more YAML —more YAML, more problems. I could have named subdirectories in /images that use a relative path so I could see the images in draft previews, and count on them to be copied correctly. That creates two copies of all my images though.

The important thing is that I can keep screwing around with this. It is very satisfying when I get some part of this working the way I want it to, and that makes all the frustrations worthwhile.

Again, I don’t recommend anyone do any of this, or use anything that’s here. Use Ghost, or even another off-the-shelf platform that has support so you don’t have things standing in the way of you writing your blog. Think of all the blog posts I could have written if I wasn’t trying to make sure my mustache was asynchronous?